Google has informed developers of a vulnerability in WebRTC versions earlier than M102. Impacts, workarounds, and updates can be found here.

The features below are new tools we've added to Pixel Streaming. They open up new possibilities, but they are unstable and should be used with caution. We recommend that you do not build critical components of your product on them, as they may change or be removed in subsequent releases of Unreal Engine.

Pixel Streaming Player

The Pixel Streaming Player lets you show active pixel streams on a display surface in 3D space inside your Unreal Engine project. This allows you to use cloud-hosted content as a media source within local applications.

As of 5.2, the Pixel Streaming Player is non-functional. We are updating it to use the new AVCodecs video coding module, but these changes will not be ready until 5.3.

Setting up your Pixel Streaming Player

- Enable the Pixel Streaming and Pixel Streaming Player plugins.

- Create a new Blueprint class (Actor). Name and save it as you like.

- Open the new Blueprint class and add two components: PixelStreamingSignalling and PixelStreamingPeer.

- Drag the PixelStreamingSignalling component into the Event Graph. Drag off from this node and create a Connect node. Connect BeginPlay to the input of the new node and enter "ws://localhost" as the URL value.

- Select the PixelStreamingSignalling component and add the On Config, On Offer, and On Ice Candidate events from the Details window. Add the On Ice Candidate event from the PixelStreamingPeer component as well.

- Drag off from the On Config (PixelStreamingSignalling) node and create a Set Config (PixelStreamingPeer) node. Ensure the Config values are connected between On Config and Set Config.

- Drag off from the On Offer (PixelStreamingSignalling) node and create a Create Answer node. Ensure the Offer output of the On Offer node is connected to the Offer input of the Create Answer node. Drag off from the Return Value output of the Create Answer node and create a Send Answer node. Ensure the Return Value connects to the Answer input of the Send Answer node.

- Drag off from the Candidate output of the On Ice Candidate (PixelStreamingSignalling) node and create a Receive Ice Candidate node. Ensure the output connects to the Candidate input of the Receive Ice Candidate node.

- Drag off from the Candidate output of the On Ice Candidate (PixelStreamingPeer) node and create a Send Ice Candidate (PixelStreamingSignalling) node. Ensure the output connects to the Candidate input of the Send Ice Candidate (PixelStreamingSignalling) node.

- If the above is done correctly, your finished Blueprint should look like this:

- Select the PixelStreamingPeer component in the Blueprint. In the Details window under Properties, you should see Pixel Streaming Video Sink. Select the drop-down and choose Media Texture. Name and save accordingly.

- When prompted, select and save the Video Output Media Asset.

- Drag your Blueprint Actor into the scene. Create a simple plane object, then resize and shape it into a suitable display.

- Drag your saved `NewMediaPlayer_Video` directly from the Content Browser onto the plane in the scene. This automatically creates a Material and applies it to the object.

- Start a basic local Pixel Stream external to this project: start the signalling server and run the application with the relevant Pixel Streaming arguments.

- Play your scene. You should now see your external Pixel Stream displayed on the plane in your scene!
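The event wiring built in the Blueprint steps above follows the usual WebRTC pattern of forwarding config, offer, answer, and ICE candidates between a signalling connection and a peer. The sketch below models that routing in plain Python so the data flow is easier to follow. It is not Unreal Engine code: `FakePeer`, `FakeSignalling`, `wire`, the message labels, and the `iceServers` key are all hypothetical stand-ins chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class FakePeer:
    """Hypothetical stand-in for the PixelStreamingPeer component."""
    config: Optional[dict] = None
    remote_candidates: List[str] = field(default_factory=list)
    on_ice_candidate: Optional[Callable[[str], None]] = None

    def set_config(self, config: dict) -> None:
        self.config = config

    def create_answer(self, offer: str) -> str:
        # A real peer would build an SDP answer; this only tags the offer.
        return f"answer-to:{offer}"

    def receive_ice_candidate(self, candidate: str) -> None:
        self.remote_candidates.append(candidate)


@dataclass
class FakeSignalling:
    """Hypothetical stand-in for the PixelStreamingSignalling component."""
    sent: List[tuple] = field(default_factory=list)
    on_config: Optional[Callable[[dict], None]] = None
    on_offer: Optional[Callable[[str], None]] = None
    on_ice_candidate: Optional[Callable[[str], None]] = None

    def connect(self, url: str) -> None:
        self.sent.append(("connect", url))

    def send_answer(self, answer: str) -> None:
        self.sent.append(("answer", answer))

    def send_ice_candidate(self, candidate: str) -> None:
        self.sent.append(("iceCandidate", candidate))


def wire(signalling: FakeSignalling, peer: FakePeer) -> None:
    """Connect events to actions in the same order as the Blueprint graph."""
    # On Config -> Set Config
    signalling.on_config = peer.set_config

    # On Offer -> Create Answer -> Send Answer
    def handle_offer(offer: str) -> None:
        signalling.send_answer(peer.create_answer(offer))

    signalling.on_offer = handle_offer
    # On Ice Candidate (signalling) -> Receive Ice Candidate (peer)
    signalling.on_ice_candidate = peer.receive_ice_candidate
    # On Ice Candidate (peer) -> Send Ice Candidate (signalling)
    peer.on_ice_candidate = signalling.send_ice_candidate


signalling, peer = FakeSignalling(), FakePeer()
wire(signalling, peer)
signalling.connect("ws://localhost")  # BeginPlay -> Connect

# Simulate the events a signalling server and the local peer would raise.
signalling.on_config({"iceServers": []})
signalling.on_offer("offer-sdp")
signalling.on_ice_candidate("remote-candidate")
peer.on_ice_candidate("local-candidate")
```

After the simulated events, `signalling.sent` holds the connect request, the answer, and the local candidate, while `peer.remote_candidates` holds the candidate received from the remote side. If a stream fails to appear, checking each of these four links in the Blueprint is a reasonable first step.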

VCam

VCam is a new feature that allows you to attach a VCam component to an in-scene Actor and stream the video content of the Level Viewport to an output provider.

At this stage, VCam is mostly intended for virtual production use cases. It can be paired with the Live Link VCam iOS application and used for ARKit tracking. This is useful for piloting virtual cameras in Unreal Engine, with Pixel Streaming handling touch events and streaming the Level Viewport as a real-time video feed back to the iOS device. For more information on Live Link VCam, see iOS Live Link VCam.

How to use VCam

- Ensure you have the Virtual Camera plugin enabled.

- Add the VCam component to any in-scene Actor (in the example below, it has been attached to the Pixel Streaming Player Blueprint created in the guide above).

- In the VCam component, navigate to Output Providers and add Pixel Streaming Provider from the drop-down. Expand the new Output section.

- To start and stop your stream, toggle the Is Active checkbox under Output.

- Once started, open a local browser and navigate to 127.0.0.1 to see your streamed display, or open the Live Link VCam iOS application, enter 127.0.0.1, and hit Connect.

If you want to interact with the stream through the browser, you will need to open the control panel in the browser and change the Control Scheme to Hovering.

Use Microphone

Pixel Streaming can now play back the microphone of a particular peer (player) in-engine, using WebRTC audio captured through the web browser.

Setting up Use Microphone in Project

Making your project microphone-compatible requires only a single addition to your project.

-

Enable the Pixel Streaming Plugin.

-

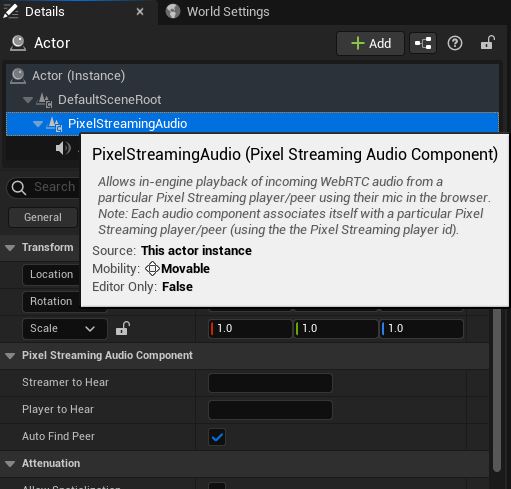

On any Actor in scene, add the

PixelStreamingAudiocomponent. You can leave its settings as the default.

Each audio component associates itself with a particular Pixel Streaming player/peer (using the the Pixel Streaming Player ID)

Using Microphone in Stream

- Once your project is set up with the `PixelStreamingAudio` component, run your application as usual for Pixel Streaming (packaged, or standalone with Pixel Streaming launch arguments) and launch your signalling server.

- Connect to your signalling server via web browser.

- Open the frontend settings panel and set `Use Mic` to `true`. Click Restart at the bottom to reconnect.

- Your browser may ask for permission to use your microphone; make sure you allow access.

- Speak into your microphone. You should hear your voice played back through the stream!

Pixel Streaming in Virtual Reality

Virtual Reality (VR) Pixel Streaming is a new feature that provides users with the means to connect to a VR-compatible application using Pixel Streaming. This allows users to enjoy a VR experience with their own headsets, without running a local application.

Setting Up the Project

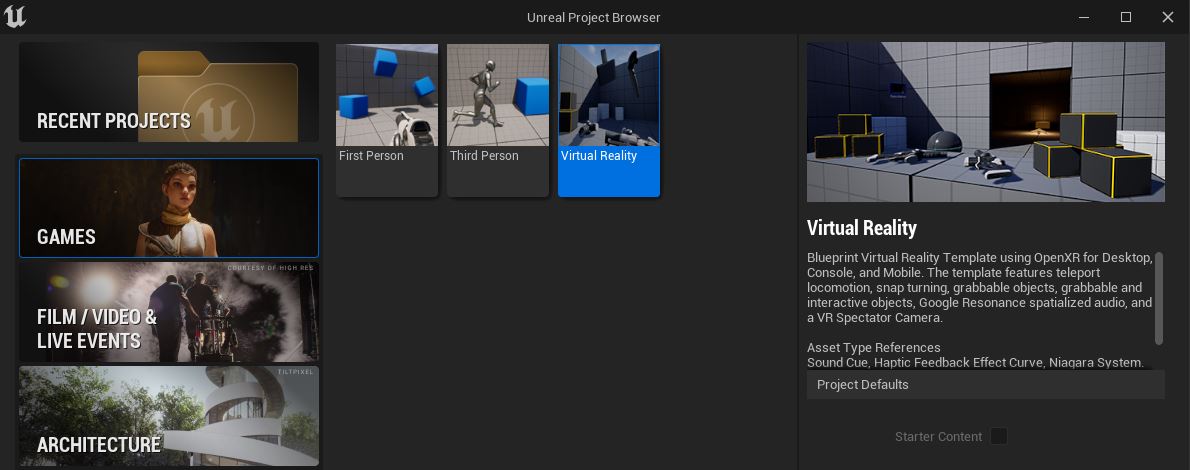

For this example, we'll use the Virtual Reality template project.

- Create a new project using the Virtual Reality template.

- Enable the Pixel Streaming plugin and disable the OpenXR plugin. Restart the editor.

- In the Content Browser, search for "Asset_Guideline" and delete "B_AssetGuideline_VRTemplate". When prompted, click Force Delete.

- Now search for "VRPawn" in the Content Browser. Double-click VRPawn to open it, then compile the Blueprint. If everything is working properly, it should compile successfully. Save and close this Blueprint.

- Open Editor Preferences > Level Editor > Play and add the launch arguments `-PixelStreamingURL=ws://127.0.0.1:8888 -PixelStreamingEnableHMD`.
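If you launch the application from a script rather than the editor, the same two arguments can be assembled programmatically. The helper below is a minimal sketch: the function name `pixel_streaming_args` and its parameters are hypothetical, and only the two flags shown in the step above come from this guide.

```python
def pixel_streaming_args(host: str = "127.0.0.1",
                         port: int = 8888,
                         enable_hmd: bool = True) -> str:
    """Build the launch argument string used in this guide."""
    # The signalling server address goes into -PixelStreamingURL.
    args = [f"-PixelStreamingURL=ws://{host}:{port}"]
    # -PixelStreamingEnableHMD is the flag this guide adds for VR streaming.
    if enable_hmd:
        args.append("-PixelStreamingEnableHMD")
    return " ".join(args)


print(pixel_streaming_args())
```

With the defaults, this produces the same string as the step above.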

Creating the Required Certificates

You need an HTTPS certificate to use VR with Pixel Streaming, because the WebXR standard only makes the API available to sites loaded over a secure connection (HTTPS). For production use, you will need a secure origin to support WebXR. You can find more information on these requirements here: https://developer.oculus.com/documentation/web/port-vr-xr/#https-is-required.

For this example, we'll set up a basic certificate using Git Bash. If you do not have Git Bash installed, see this page for installation steps: https://www.atlassian.com/git/tutorials/git-bash.

- Create a `certificates` folder inside the `SignallingWebServer` directory, as shown below:

- Right-click inside the `certificates` directory and open Git Bash. Type in `openssl req -x509 -newkey rsa:4096 -keyout client-key.pem -out client-cert.pem -sha256 -nodes`.

- Press Enter multiple times until the command is complete. You'll know it's done when it has created two `.pem` files in the `certificates` folder.

- Open the `config.json` file found in the `SignallingWebServer` folder and set the `UseHTTPS` value to `true`.

You should now be ready to run and test your VR application!

The certificate created above is only for testing purposes. For full cloud deployment, you will need to organise a proper certificate.
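The last certificate step can also be scripted. The sketch below checks that both `.pem` files from the `openssl` command exist and then sets `UseHTTPS` in `config.json`. It assumes `config.json` is plain JSON without comments, and it rewrites the file with fresh indentation, so any original formatting is lost. The function name `enable_https` is hypothetical.

```python
import json
from pathlib import Path


def enable_https(server_dir: Path) -> dict:
    """Set UseHTTPS to true in config.json and return the updated config."""
    # Both files are produced by the openssl command in the steps above.
    cert_dir = server_dir / "certificates"
    missing = [name for name in ("client-key.pem", "client-cert.pem")
               if not (cert_dir / name).is_file()]
    if missing:
        raise FileNotFoundError(f"Missing in {cert_dir}: {', '.join(missing)}")

    # Assumption: config.json is plain JSON (no comments or trailing commas).
    config_path = server_dir / "config.json"
    config = json.loads(config_path.read_text(encoding="utf-8"))
    config["UseHTTPS"] = True
    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config
```

For example, calling `enable_https(Path("SignallingWebServer"))` from the directory that contains the `SignallingWebServer` folder would update the config in place, or raise `FileNotFoundError` if a certificate file has not been generated yet.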

Joining the VR Stream

For this example, we'll be using the Meta Quest 2.

- Start the `Start_Signalling.ps1` script found in `\SignallingWebServer\platform_scripts\cmd`.

- Going back to the editor, run the application standalone. Because you added the launch arguments in the previous steps, it should connect to the signalling server once it has fully started up.

- Using your VR headset, open the web browser and enter your computer's IP address. You'll be presented with a "Connection not secure" page; open the "Advanced" tab and click "Proceed to IP".

- You should see the application streamed to two views in the browser window. Click the XR button on the left to switch to VR.

- Done! You should now be in your Pixel Streamed VR project!